Real-time Action Recognition

Artificial Intelligence

Computer Vision

ML4Health

Realtime Systems

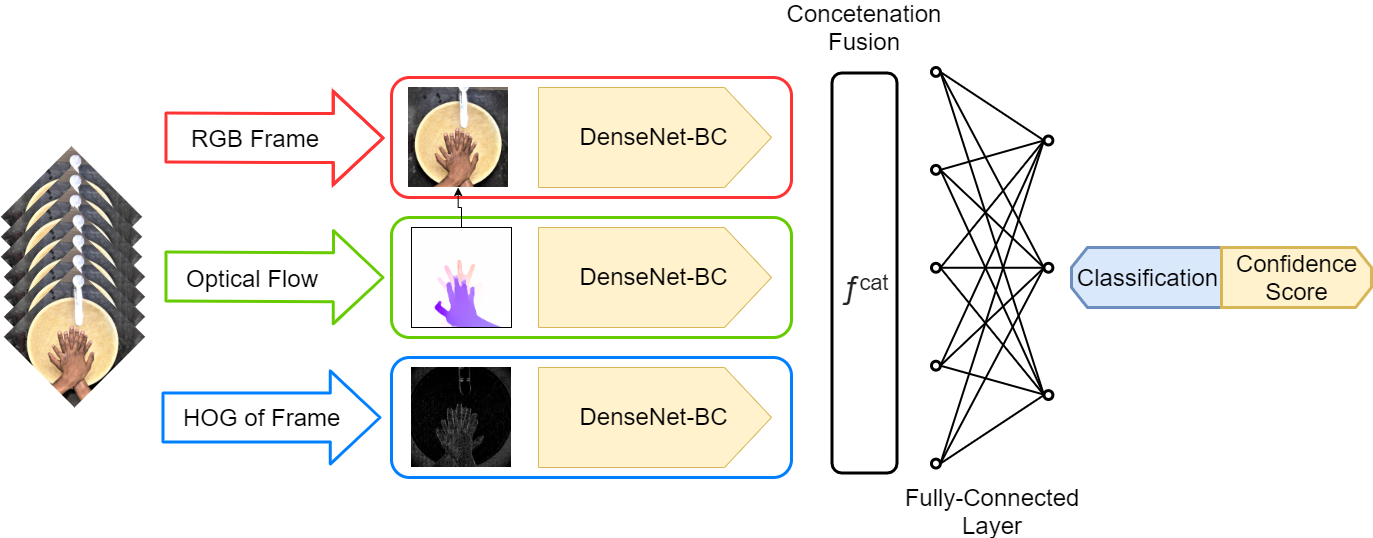

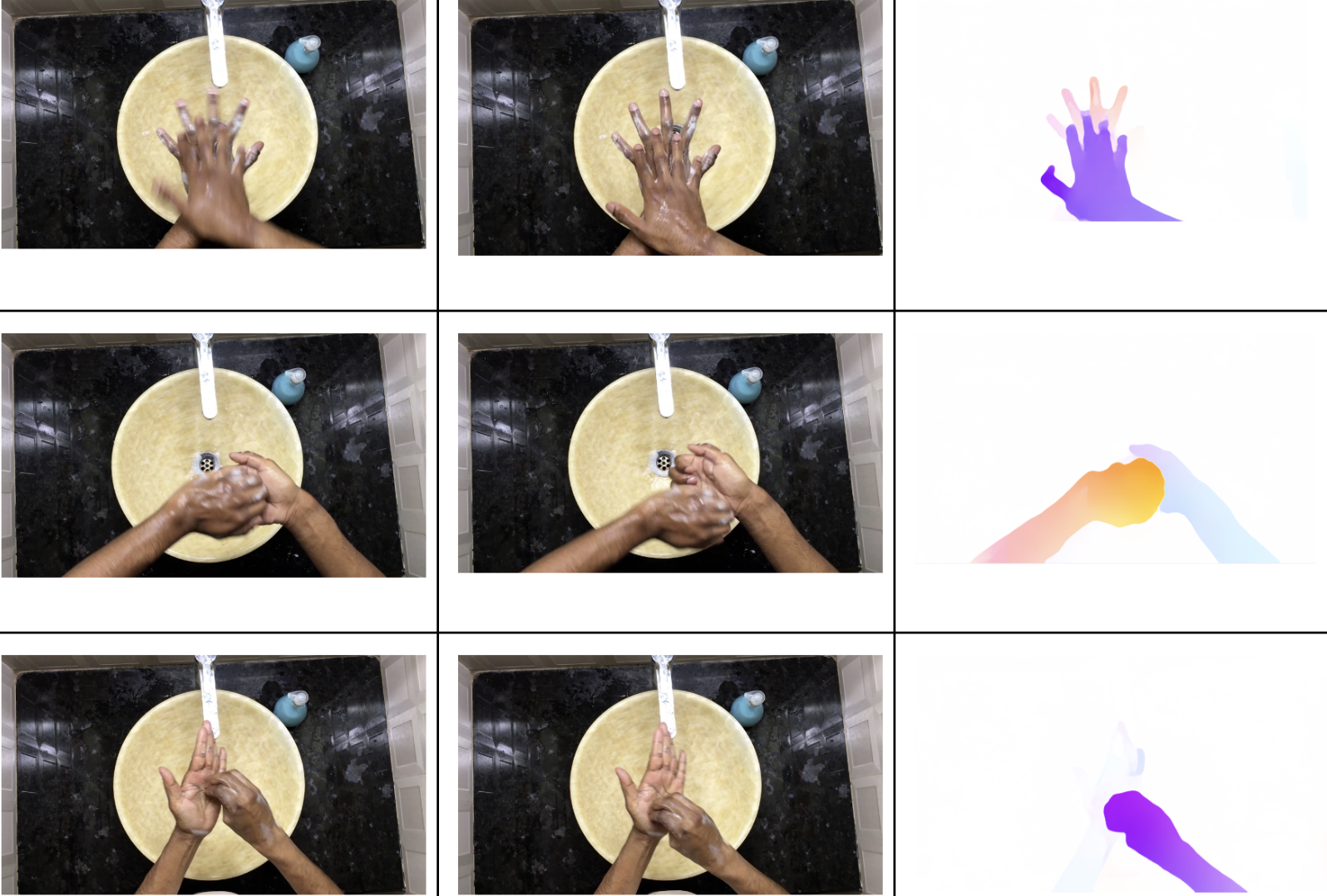

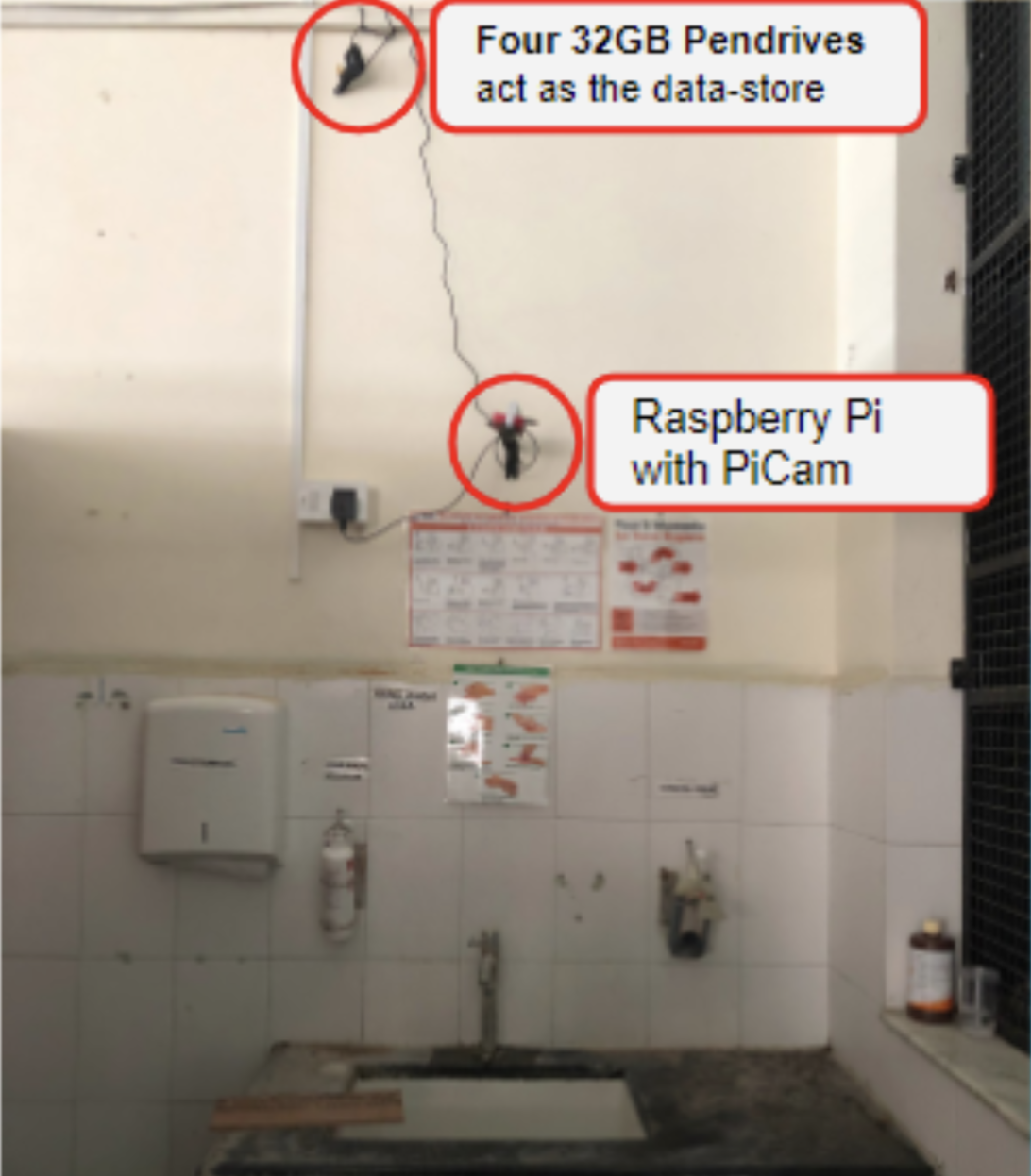

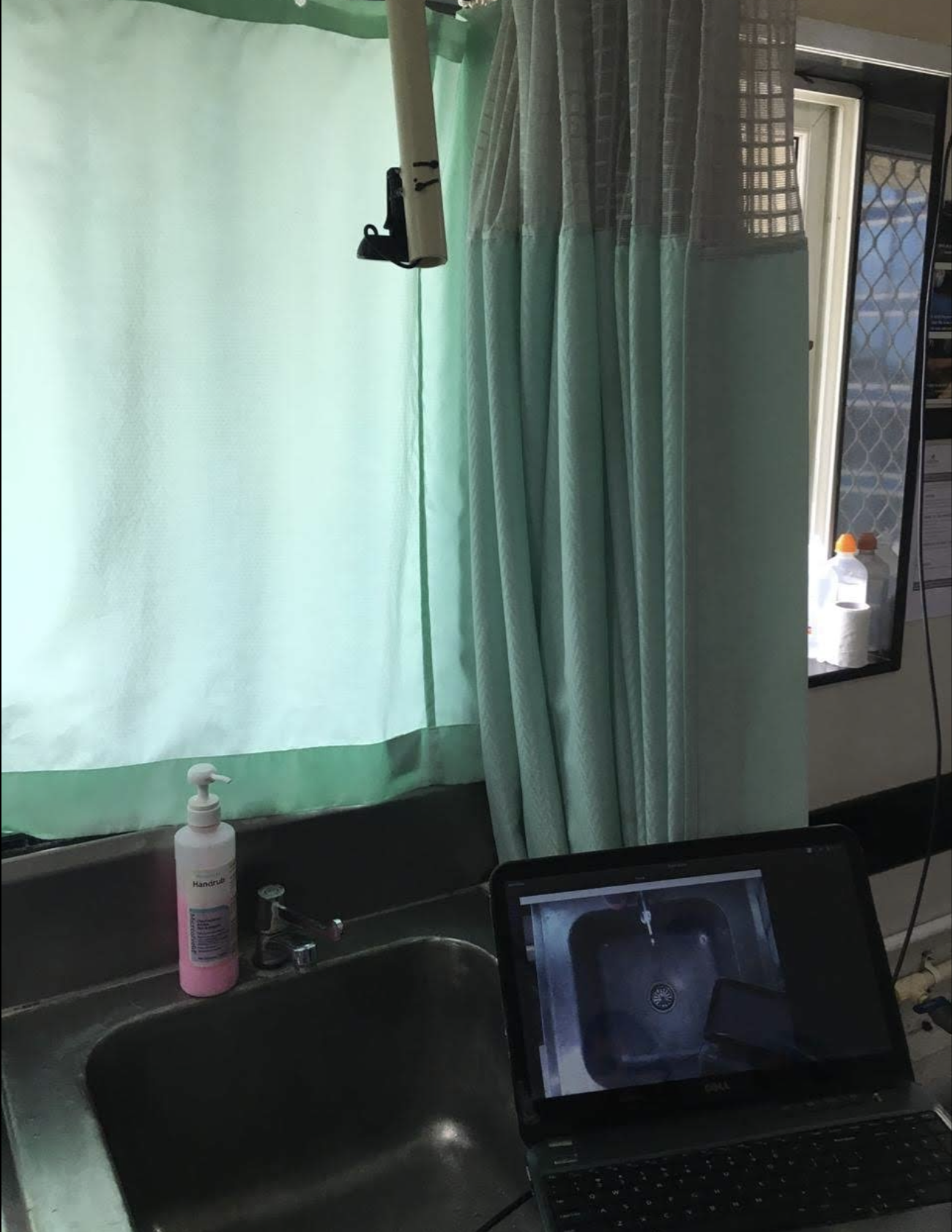

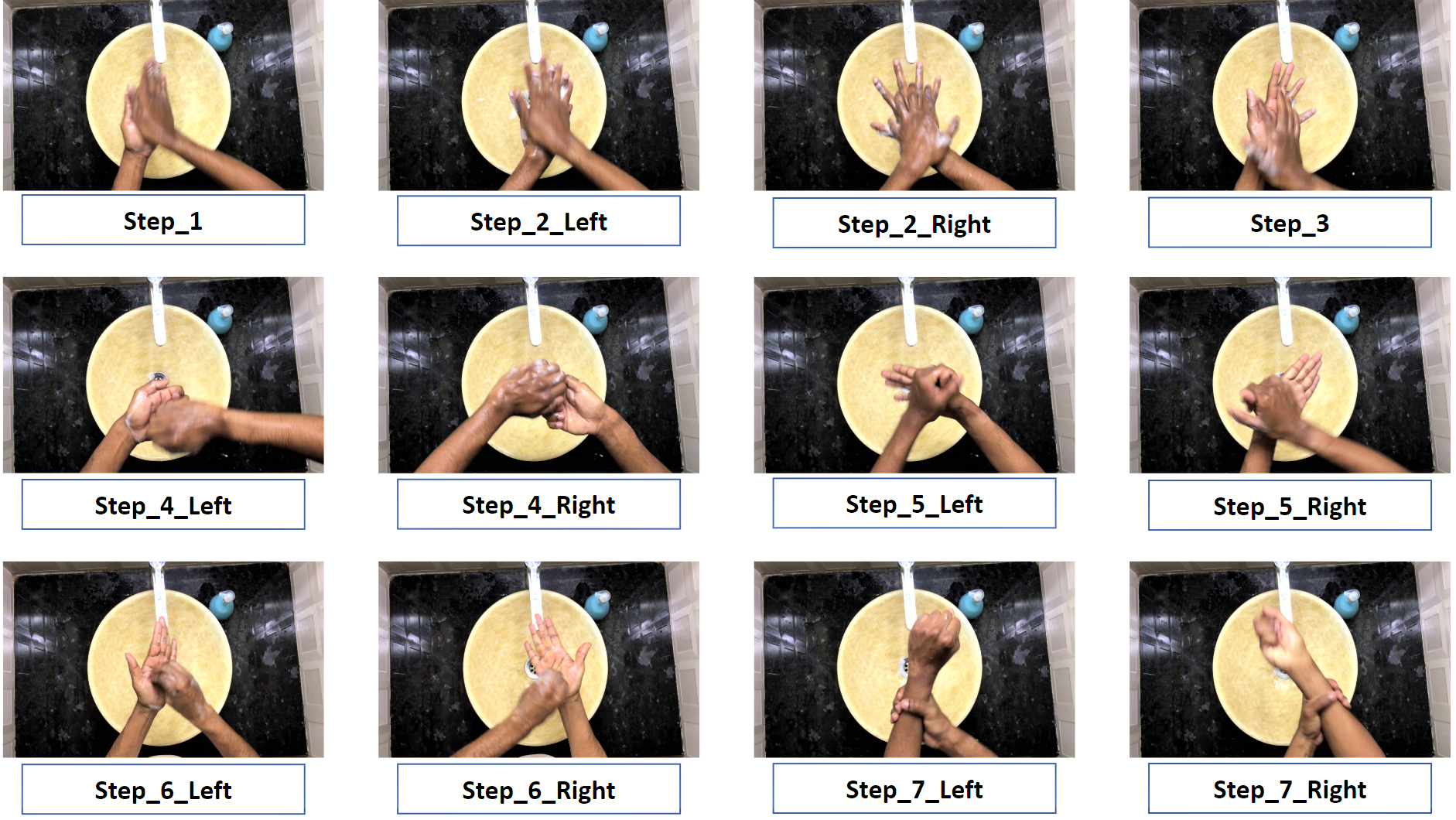

Used AI to address a real-world, potentially life-saving problem: minimizing Hospital-Acquired Infections (HAIs). HAIs can spread between patients when healthcare workers do not sanitize their hands properly. In 2019, standard action recognition (AR) architectures struggled to differentiate between visually similar actions, and the steps of hand sanitization are far more similar to one another than the actions in conventional AR benchmark datasets. As shown in~\cite{rl}, I addressed this limitation by extending the two-stream AR algorithm with a third, object-level information stream built on feature descriptors. I then optimized the system to run in real time using network pruning and deployed it in local hospitals, where it led to an 83% reduction in HAIs. The project, currently patent-pending, received the Best Undergraduate Thesis award. For me, an important takeaway was understanding the role of spatial, temporal, and object-level information in both human and computer vision.
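The benefit of a third stream can be illustrated with a minimal late-fusion sketch. It assumes each stream (spatial, temporal, and object-level) already outputs one logit per hand-wash step, and that the streams are combined by a weighted average of their softmax scores, a common fusion rule for two-stream networks. The source does not say which fusion rule the project actually used, and the stream names, step labels, weights, and logit values below are all hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one stream's class logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_streams(stream_logits: Dict[str, Sequence[float]],
                 weights: Dict[str, float]) -> List[float]:
    """Late fusion: weighted average of per-stream class probabilities."""
    num_classes = len(next(iter(stream_logits.values())))
    total_w = sum(weights[name] for name in stream_logits)
    fused = [0.0] * num_classes
    for name, logits in stream_logits.items():
        if len(logits) != num_classes:
            raise ValueError(f"stream {name!r} has {len(logits)} classes, "
                             f"expected {num_classes}")
        w = weights[name] / total_w  # normalize so fused scores sum to 1
        for c, p in enumerate(softmax(logits)):
            fused[c] += w * p
    return fused


def predict(stream_logits: Dict[str, Sequence[float]],
            weights: Dict[str, float],
            class_names: Sequence[str]) -> Tuple[str, float]:
    """Return the highest-scoring class name and its fused probability."""
    fused = fuse_streams(stream_logits, weights)
    best = max(range(len(fused)), key=fused.__getitem__)
    return class_names[best], fused[best]


# Hypothetical example: three visually similar hand-wash steps.
CLASSES = ["palm_to_palm", "back_of_hands", "interlaced_fingers"]
logits = {
    "spatial": [2.0, 1.9, 0.1],   # appearance alone is nearly a tie
    "temporal": [1.0, 1.1, 0.5],  # motion is only mildly informative
    "object": [0.2, 3.0, 0.1],    # object-level cues are decisive here
}
weights = {"spatial": 1.0, "temporal": 1.0, "object": 1.0}

label, score = predict(logits, weights, CLASSES)
print(label)  # → back_of_hands
```

Here the spatial stream alone would narrowly favor `palm_to_palm`; the object-level stream's confident score shifts the fused prediction, which is the kind of disambiguation between similar actions that the extra stream is meant to provide. In practice the per-stream weights would be tuned on validation data rather than fixed at 1.0.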

Technologies Used: Python, TensorFlow, Raspberry Pi, OpenCV.

My Role:

- Led a team of three undergraduate students (including myself).

- Designed the three-stream action recognition system.

- Manually recorded and compiled the Hand Wash Dataset.

- Collected training data in hospital wards.

- Ran trials in hospital ICUs and wards to gauge feedback from doctors.

- Developed the frame buffer for real-time processing.

- Pruned the neural network to ensure real-time inference.

- Built the entire system setup (hardware and case), using 3D printing, for final product deployment.
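A real-time frame buffer of the kind listed above can be sketched as a fixed-length sliding window: it keeps only the most recent frames and hands a clip to the classifier every few frames, so inference does not run on every single frame. This is a minimal sketch under assumptions; the class name `FrameBuffer` and the `clip_len` and `stride` parameters are hypothetical, and the source does not describe the project's actual buffer design. On a device, frames would typically come from a camera loop such as OpenCV's `cv2.VideoCapture.read()`; integers stand in for frames here so the example runs without a camera.

```python
from collections import deque
from typing import Deque, Generic, Iterable, Iterator, List, Optional, TypeVar

Frame = TypeVar("Frame")


class FrameBuffer(Generic[Frame]):
    """Hypothetical fixed-length sliding window over a live frame stream.

    Emits a clip of `clip_len` consecutive frames every `stride` new frames,
    so the classifier runs on overlapping clips instead of on every frame.
    """

    def __init__(self, clip_len: int, stride: int) -> None:
        if clip_len < 1 or stride < 1:
            raise ValueError("clip_len and stride must be positive")
        self.clip_len = clip_len
        self.stride = stride
        # deque with maxlen silently drops the oldest frame when full
        self._frames: Deque[Frame] = deque(maxlen=clip_len)
        self._since_last = 0

    def push(self, frame: Frame) -> Optional[List[Frame]]:
        """Add one frame; return a clip when one is due, else None."""
        self._frames.append(frame)
        self._since_last += 1
        if len(self._frames) == self.clip_len and self._since_last >= self.stride:
            self._since_last = 0
            return list(self._frames)  # copy, so later pushes don't mutate it
        return None


def clips(frames: Iterable[Frame], clip_len: int, stride: int) -> Iterator[List[Frame]]:
    """Yield every clip the buffer emits while consuming `frames`."""
    buf: FrameBuffer = FrameBuffer(clip_len, stride)
    for frame in frames:
        clip = buf.push(frame)
        if clip is not None:
            yield clip


# Usage: ten stand-in frames, clips of 4 frames, a new clip every 2 frames.
result = list(clips(range(10), clip_len=4, stride=2))
print(result)  # → [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
```

A larger `stride` lowers the number of inference calls per second at the cost of reacting more slowly to a change of step, which is the main knob for fitting inference onto a small device alongside pruning.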