Unvoiced: Sign Language to Speech

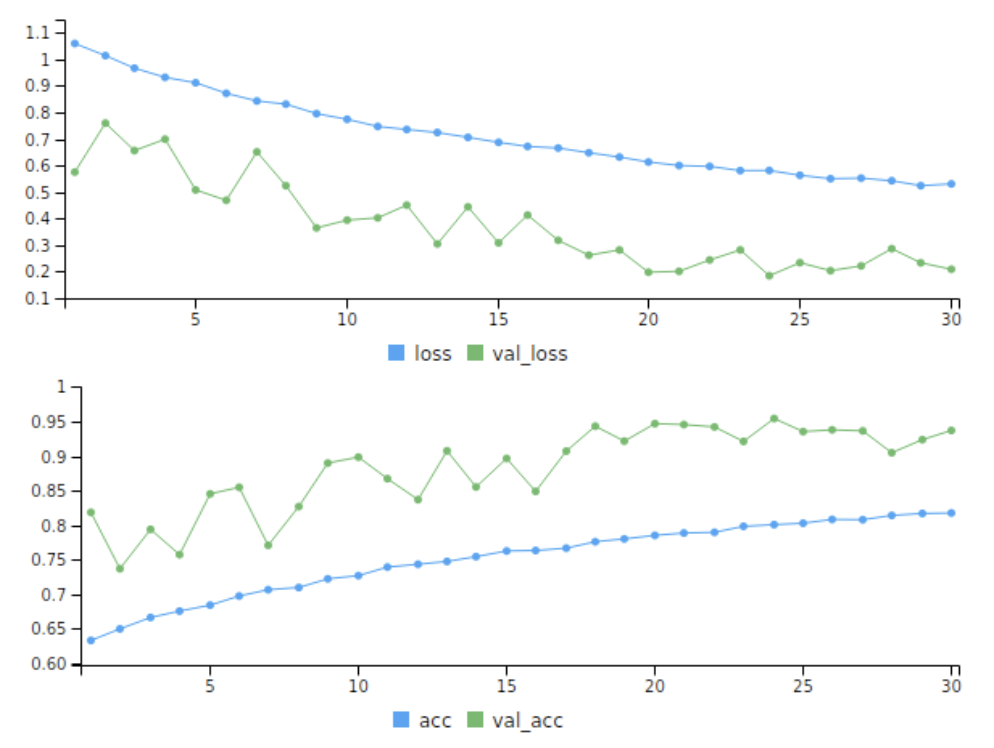

Artificial Intelligence

Computer Vision

AI4SocialGood

Realtime Systems

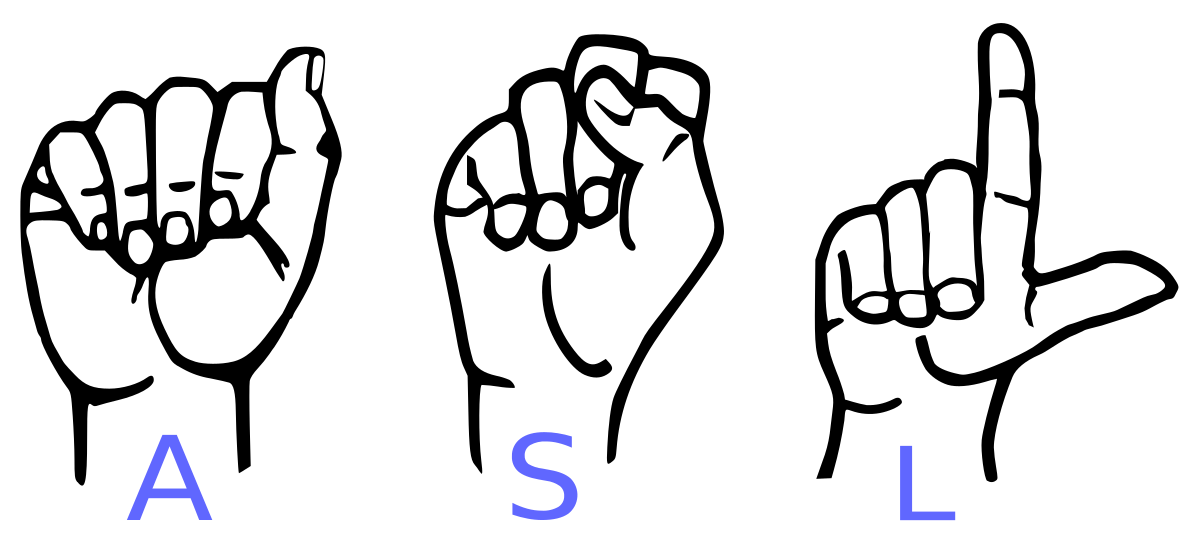

A real-time application that uses a camera's live video feed to detect the alphabet letters signed by a sign language user, converts them into text, and then converts the text into speech. It is intended to aid communication with people who have trouble with spoken language due to a disability or condition.
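The step from per-frame letter detections to text can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function name `frames_to_text`, the `min_stable_frames` threshold, and the special labels `"nothing"`, `"space"`, and `"del"` are all assumptions made for the example. In a live system the labels would come from a classifier run on each camera frame, and the resulting string would be passed to a text-to-speech engine; both are left out here so the sketch stays self-contained.

```python
def frames_to_text(frame_labels, min_stable_frames=5):
    """Collapse a stream of per-frame predicted labels into text.

    A label is accepted once it has been predicted for exactly
    `min_stable_frames` consecutive frames, so a held sign yields one
    character instead of one character per frame.
    All label names below are hypothetical, chosen for illustration.
    """
    text = []
    current = None   # label seen in the most recent frame
    run_length = 0   # number of consecutive frames showing `current`

    for label in frame_labels:
        if label == current:
            run_length += 1
        else:
            current = label
            run_length = 1

        # Act only at the moment the run first reaches the threshold.
        if run_length != min_stable_frames:
            continue

        if label == "nothing":      # assumed label: no hand in view
            continue
        if label == "space":        # assumed label: word separator
            text.append(" ")
        elif label == "del":        # assumed label: erase last character
            if text:
                text.pop()
        else:
            text.append(label)

    return "".join(text)


# Simulated predictions: "H" held, hand lowered, "I" held, brief noise.
frames = ["H"] * 5 + ["nothing"] * 5 + ["I"] * 6 + ["A"] * 2
print(frames_to_text(frames))
```

With this design a signer would need to lower the hand or change signs between two identical letters, since a continuous run is counted only once. The two trailing `"A"` frames are ignored because they never reach the stability threshold.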

Technologies Used: Python, TensorFlow, OpenCV.

My Role:

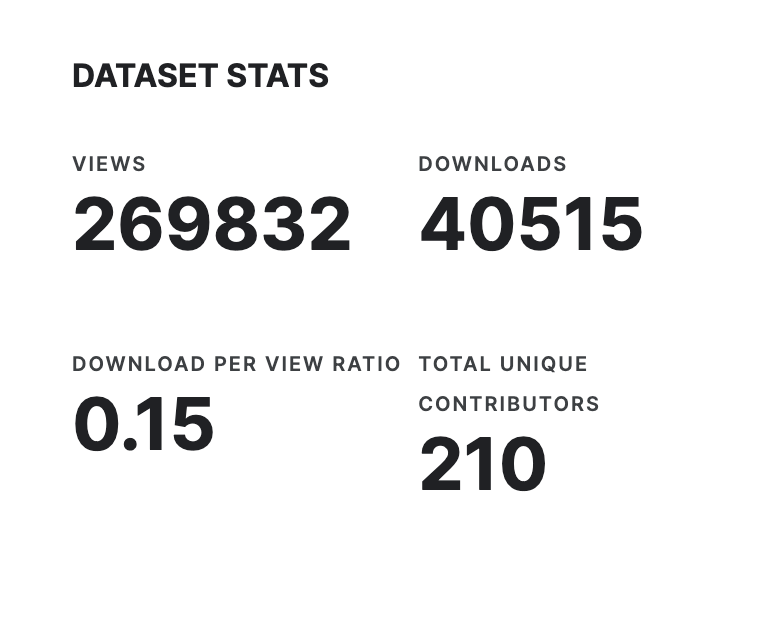

- Created the American Sign Language Alphabet Dataset, which has 75+ citations and over 38,000 downloads.

- Developed a web application and deployed the system for real-time inference.

- Manually recorded multiple students to create the ASL Dataset with over 30,000 images.